What is Federated Learning?

Federated Learning is a decentralized machine learning paradigm. Instead of collecting user data on a central server, we send the model to the data: each device trains locally and shares only model updates. This enables large-scale collaborative learning while raw data stays where it was produced, which helps protect user privacy and meet data residency requirements.

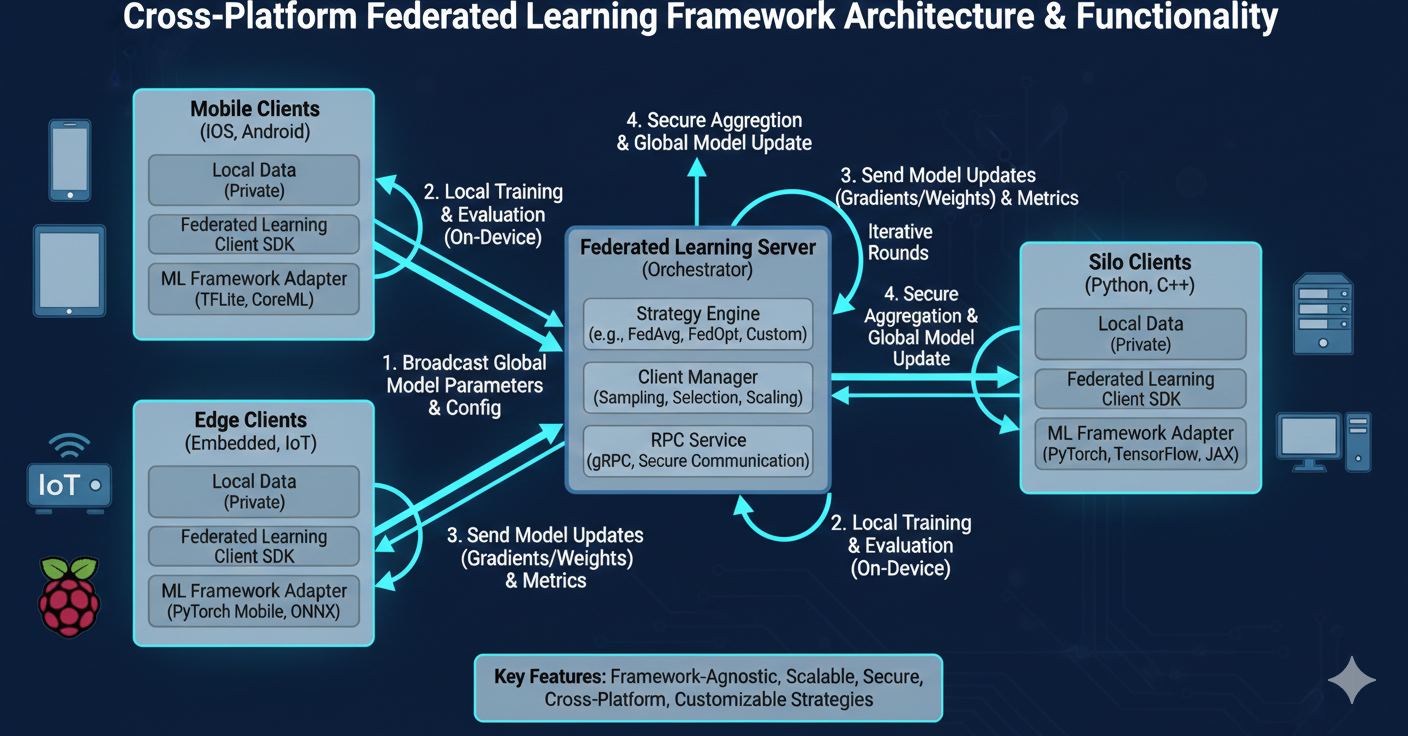

System Architecture

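Our architecture orchestrates training through a central coordinator and distributed clients across four stages: **Selection**, **Training**, **Aggregation**, and **Update**. In each round the coordinator selects a cohort of clients, each selected client trains the current model on its local data, the coordinator aggregates the returned parameters, and the global model is updated for the next round. The central server never sees raw data, only model parameter updates.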
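The four stages can be sketched as a single training round. This is a minimal illustration, not the framework's actual API: the function names (`local_train`, `fed_avg`, `run_round`), the two-parameter linear model, and the sample-count weighting are all assumptions chosen to keep the example self-contained.

```python
import random

def local_train(weights, data, lr=0.1, epochs=5):
    """Hypothetical client step: gradient descent on y = w*x + b using local data only."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    # Only parameters and a sample count leave the client, never the data.
    return (w, b), len(data)

def fed_avg(results):
    """Average client parameters, weighted by each client's sample count."""
    total = sum(n for _, n in results)
    dim = len(results[0][0])
    return tuple(sum(p[i] * n for p, n in results) / total for i in range(dim))

def run_round(global_weights, clients, fraction, rng):
    # 1. Selection: sample a cohort of clients for this round.
    k = max(1, int(len(clients) * fraction))
    selected = rng.sample(clients, k)
    # 2. Training: each selected client trains locally from the global weights.
    results = [local_train(global_weights, data) for data in selected]
    # 3. Aggregation: combine the returned parameters.
    new_weights = fed_avg(results)
    # 4. Update: the aggregate becomes the next global model.
    return new_weights
```

Calling `run_round` repeatedly, feeding each result back in as `global_weights`, gives the outer training loop. Here each element of `clients` is simply a list of `(x, y)` pairs standing in for one device's private dataset.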

Core Capabilities

Flexible Strategies

Support for diverse federated optimization strategies such as FedAvg and FedProx.
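To illustrate how the two differ: FedProx keeps FedAvg's server-side averaging but adds a proximal term, (mu / 2) * ||w - w_global||^2, to each client's local objective, which discourages local models from drifting far from the global one. The sketch below shows a single local gradient step under that objective. The function name `fedprox_step` and its defaults are hypothetical and not taken from this framework.

```python
def fedprox_step(w, grad, w_global, mu=0.1, lr=0.1):
    """One local step on loss(w) + (mu / 2) * ||w - w_global||^2.

    `grad` is the gradient of the plain local loss at `w`. The proximal
    term contributes mu * (w - w_global). With mu = 0 this reduces to the
    ordinary local SGD step used by FedAvg.
    """
    return [
        wi - lr * (gi + mu * (wi - wgi))
        for wi, gi, wgi in zip(w, grad, w_global)
    ]
```

Larger values of `mu` pull clients more strongly toward the global model, which can help when client data is highly heterogeneous, at the cost of slower local progress.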

Client Management

Built-in handling for millions of concurrent edge devices and IoT nodes.

Framework Agnostic

Native compatibility with PyTorch, TensorFlow, and JAX workflows.

Modular SDK

Lightweight deployment footprint optimized for mobile and edge environments.

Enterprise Scalability

Scale from laboratory simulations to massive production edge fleets seamlessly.

Research-Ready

Easily test and implement new privacy-preserving protocols and optimizers.

Privacy-Enhancing Technologies

Secure Aggregation

Parameter updates are cryptographically masked so the server only sees the aggregate sum, never individual user contributions.
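One common way to achieve this is pairwise additive masking, sketched below as a toy example. It is an assumption-laden illustration rather than this system's protocol: updates are taken to be already quantized to integers, the helper names are hypothetical, and the masks come from a seeded `random.Random` purely for reproducibility. A real protocol would derive each pair's mask from a key agreement between the two clients and would handle client dropouts.

```python
import random

MOD = 2 ** 32  # all arithmetic is done modulo a fixed integer

def pairwise_masks(num_clients, dim, seed=0):
    """Toy setup: one shared random mask per client pair (i, j) with i < j.
    NOT cryptographically secure; stands in for a per-pair shared secret."""
    rng = random.Random(seed)
    return {
        (i, j): [rng.randrange(MOD) for _ in range(dim)]
        for i in range(num_clients)
        for j in range(i + 1, num_clients)
    }

def mask_update(client_id, update, masks, num_clients):
    """Add the pair mask when this client has the lower id, subtract it otherwise."""
    masked = list(update)
    for other in range(num_clients):
        if other == client_id:
            continue
        pair = (min(client_id, other), max(client_id, other))
        sign = 1 if client_id < other else -1
        masked = [(m + sign * r) % MOD for m, r in zip(masked, masks[pair])]
    return masked

def aggregate(masked_updates):
    """Server side: summing masked updates cancels every pair mask."""
    dim = len(masked_updates[0])
    return [sum(u[i] for u in masked_updates) % MOD for i in range(dim)]
```

Each mask is added by one client and subtracted by its partner, so the masks vanish in the sum while every individual masked update looks like random noise to the server.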

Differential Privacy

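Calibrated random noise is added to bounded (clipped) updates so that the final model changes only slightly whether or not any single individual's data was included. This places a provable limit on what can be inferred about that individual from the model.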
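A common recipe for this is to clip each client update to a maximum L2 norm and add Gaussian noise to the sum before averaging. The sketch below assumes that recipe. The names `clip_update` and `dp_average` and the parameters `clip_norm` and `noise_multiplier` are illustrative, and the privacy accounting that turns a noise level into a concrete (epsilon, delta) guarantee is not shown.

```python
import math
import random

def clip_update(update, clip_norm):
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]

def dp_average(updates, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip every update, add Gaussian noise to the sum, then average.

    Clipping bounds each client's influence on the sum by clip_norm; the
    noise standard deviation is noise_multiplier * clip_norm per coordinate.
    """
    rng = rng or random.Random()
    clipped = [clip_update(u, clip_norm) for u in updates]
    sigma = noise_multiplier * clip_norm
    dim = len(updates[0])
    noisy_sum = [
        sum(u[i] for u in clipped) + rng.gauss(0.0, sigma)
        for i in range(dim)
    ]
    return [s / len(updates) for s in noisy_sum]
```

Clipping is what makes the noise meaningful: without a bound on each contribution, no fixed amount of noise could hide a single client's update.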