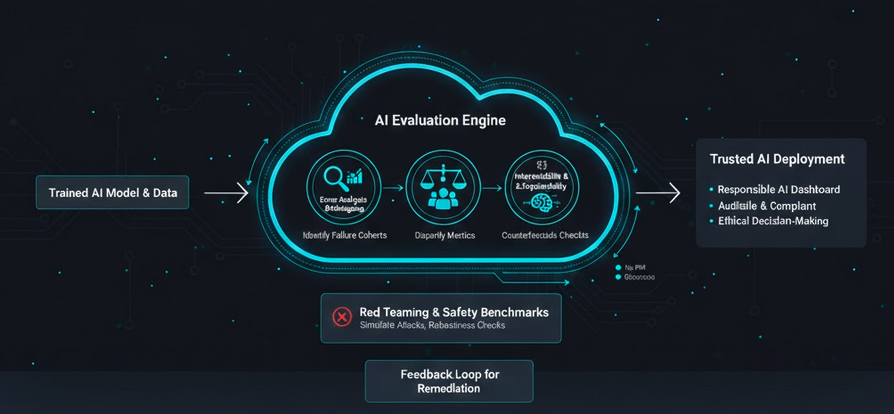

Holistic Model Assessment

Evaluating AI today requires more than measuring accuracy. It demands a deep understanding of model debugging and decision-making. Our framework provides a centralized suite for assessing how models perform across different data cohorts.

By integrating technical error analysis with human-centric interpretability, we transform AI from a "black box" into a transparent, auditable asset ready for mission-critical deployment.

The Trusted AI Toolkit

Error Analysis

Go beyond aggregate metrics. Automatically identify the data cohorts with the highest error rates and visualize how specific features contribute to failures.
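The core idea behind cohort-level error analysis is to compute the error rate separately for each cohort and rank the cohorts, instead of reporting one aggregate number. The sketch below does this for cohorts you define by hand. It assumes predictions and labels are already available as plain dicts, and `cohort_error_rates`, `age_band`, and the records are hypothetical illustrations, not the framework's API. Automated tools go further and search for high-error cohorts themselves.

```python
from collections import defaultdict


def cohort_error_rates(records, cohort_of):
    """Rank cohorts by error rate, highest first.

    Each record is a dict holding a 'label', a 'prediction', and feature values.
    cohort_of maps a record to a hashable cohort name.
    Returns a list of (cohort, error_rate, count) tuples.
    """
    errors = defaultdict(int)
    totals = defaultdict(int)
    for record in records:
        cohort = cohort_of(record)
        totals[cohort] += 1
        if record["prediction"] != record["label"]:
            errors[cohort] += 1
    rates = [(c, errors[c] / totals[c], totals[c]) for c in totals]
    return sorted(rates, key=lambda item: item[1], reverse=True)


# Hypothetical evaluation records for a binary classifier.
records = [
    {"age": 25, "label": 1, "prediction": 1},
    {"age": 30, "label": 0, "prediction": 1},
    {"age": 67, "label": 1, "prediction": 0},
    {"age": 72, "label": 0, "prediction": 1},
    {"age": 70, "label": 1, "prediction": 1},
]


def age_band(record):
    # Hypothetical cohort definition: split on a single age threshold.
    return "65+" if record["age"] >= 65 else "under 65"


ranked = cohort_error_rates(records, age_band)
for cohort, rate, count in ranked:
    print(f"{cohort}: {rate:.0%} error rate over {count} records")
```

In this toy data the overall error rate is 60%, but the ranking shows that the 65+ cohort fails more often (2 of 3) than the under-65 cohort (1 of 2).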

Fairness Metrics

Assess and mitigate disparities across cohorts defined by sensitive attributes such as age, gender, and ethnicity, using industry-standard parity constraints and fairness audits.
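Demographic parity is one common parity criterion. It asks that every group receive positive predictions at roughly the same rate. The sketch below measures the largest gap in selection rate between groups on hypothetical data. The function names are our own, and libraries such as Fairlearn provide comparable metrics with more options.

```python
def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each sensitive group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical approval decisions (1 = approve) for two groups, A and B.
predictions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

Group A is approved 75% of the time and group B 25% of the time, which gives a gap of 0.5. An audit would flag this gap against whatever tolerance the team has chosen.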

Model Interpretability

Generate global and local explanations for model predictions using SHAP and counterfactuals to understand the "why" behind every decision.
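SHAP explanations are based on Shapley values from cooperative game theory. A feature's attribution is its average marginal contribution over all orderings of the features. The sketch below computes exact Shapley values by enumeration for a hypothetical three-feature linear model, and fills in "absent" features from a single baseline point. This is a simplification. The SHAP library uses background datasets and much faster approximations, whereas exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction, using a single baseline point."""
    features = list(x)
    n = len(features)

    def value(subset):
        # Features in `subset` take the instance's values; the rest take baseline values.
        point = {f: (x[f] if f in subset else baseline[f]) for f in features}
        return model(point)

    phi = {}
    for feature in features:
        others = [g for g in features if g != feature]
        total = 0.0
        for size in range(len(others) + 1):
            # Shapley weight: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {feature}) - value(s))
        phi[feature] = total
    return phi


def linear_model(point):
    # Hypothetical model used only to illustrate the attribution.
    return 2 * point["a"] + 3 * point["b"] - point["c"]


x = {"a": 1, "b": 2, "c": 3}
baseline = {"a": 0, "b": 0, "c": 0}
phi = shapley_values(linear_model, x, baseline)
print(phi)
```

For a linear model, each attribution reduces to the feature's weight times (value minus baseline). The attributions always sum to the difference between the prediction and the baseline prediction, which is the property that makes them read as a breakdown of a single decision.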

Counterfactual Analysis

Explore what-if scenarios by identifying the minimal changes to input features that would flip a model's outcome, giving end users actionable insights.
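A counterfactual explanation answers one question: what is the smallest change that would have produced a different decision? The brute-force sketch below searches one feature at a time, in a fixed direction, against a hypothetical scoring rule. `toy_model`, `income_k`, and `open_loans` are illustrative names and are not part of the framework. Dedicated methods such as DiCE search over several features at once and add plausibility constraints.

```python
def toy_model(applicant):
    # Hypothetical scoring rule standing in for a trained classifier: 1 = approve, 0 = reject.
    score = 0.04 * applicant["income_k"] - 0.5 * applicant["open_loans"]
    return 1 if score >= 1.0 else 0


def single_feature_counterfactuals(model, x, search_space):
    """For each feature, find the smallest move (in a fixed direction) that flips the prediction.

    search_space maps feature -> (step, max_steps). Returns feature -> new value.
    Features whose prediction never flips within max_steps are omitted.
    """
    original = model(x)
    found = {}
    for feature, (step, max_steps) in search_space.items():
        for k in range(1, max_steps + 1):
            candidate = dict(x)
            candidate[feature] = x[feature] + k * step
            if model(candidate) != original:
                found[feature] = candidate[feature]
                break
    return found


applicant = {"income_k": 30, "open_loans": 1}  # rejected: score is about 0.7
search_space = {"income_k": (1, 20), "open_loans": (-1, 1)}
cfs = single_feature_counterfactuals(toy_model, applicant, search_space)
print(cfs)
```

For this hypothetical applicant, either raising income to 38 (thousand) or closing the one open loan would flip the rejection. This is the kind of concrete, actionable reason an end user can act on.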

Causal Inference

Distinguish between correlation and causation. Estimate the real-world effect of interventions rather than just predicting historical patterns.
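One standard way to move from correlation toward causation is to adjust for a confounder. You compare treated and untreated units within each stratum of the confounder, then average those within-stratum effects, weighting each by stratum size. The sketch below uses hypothetical records of (risk level, treated, outcome). It assumes that risk level is the only confounder and that every stratum contains both treated and untreated units. If either assumption fails, the estimate is biased.

```python
from collections import defaultdict


def mean(values):
    return sum(values) / len(values)


def naive_difference(rows):
    """Correlational estimate: treated mean minus untreated mean, ignoring the confounder."""
    treated = [y for _, t, y in rows if t == 1]
    control = [y for _, t, y in rows if t == 0]
    return mean(treated) - mean(control)


def stratified_ate(rows):
    """Adjusted estimate: within-stratum effects weighted by P(Z = z)."""
    strata = defaultdict(list)
    for z, t, y in rows:
        strata[z].append((t, y))
    ate = 0.0
    for units in strata.values():
        treated = [y for t, y in units if t == 1]
        control = [y for t, y in units if t == 0]
        ate += (len(units) / len(rows)) * (mean(treated) - mean(control))
    return ate


# Hypothetical (risk_level, treated, outcome) records; risk_level 1 = high risk.
rows = (
    [(1, 1, y) for y in (1, 1, 1, 0, 0, 0)]    # high risk, treated
    + [(1, 0, y) for y in (0, 0)]              # high risk, untreated
    + [(0, 1, y) for y in (1, 1)]              # low risk, treated
    + [(0, 0, y) for y in (1, 1, 1, 0, 0, 0)]  # low risk, untreated
)

print(naive_difference(rows), stratified_ate(rows))
```

Here the naive comparison reports an effect of 0.25, while the adjusted estimate is 0.5. The difference arises because treated units are concentrated in the high-risk stratum, which has worse outcomes regardless of treatment.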

Safety & Red Teaming

Simulate adversarial attacks and edge cases to stress-test robustness against jailbreaking, hallucinations, and data poisoning.

Integrative Safety Architecture

The Feedback Loop

Our architecture bridges the gap between training and deployment. By generating Responsible AI Dashboards, teams can iterate on model performance while compliance officers review safety scorecards.

Data-Driven Debugging

Instead of relying on manual inspection, our framework uses machine learning to find "blind spots" in your training data, highlighting where more representative samples are needed.

Ethical Market Impact

Financial Lending

Ensure credit-scoring models don't inadvertently discriminate through proxy variables for protected attributes, while providing clear reasons for loan rejections.

Predictive Healthcare

Validate that diagnostic algorithms perform equitably across different patient populations to prevent systemic healthcare disparities.

Recruitment & HR

Audit automated screening tools for hidden biases in resume parsing, ensuring a fair and merit-based evaluation of all candidates.